BIG DATA: Big Daddy of All Data is Big Data!!

Big data is a field that treats ways to analyze, systematically extract information from, or otherwise deal with data sets that are too large or complex to be dealt with by traditional data-processing application software.

Characteristics of Big Data

“Any data which has four Vs i.e. Volume, Variety, Veracity and Velocity can be termed as Big Data”.

Below is a description of all the four Vs:

- Volume: Big Data implies that an enormous amount of data is involved. Volume represents the amount of data and is one of the main characteristics that makes data “big”. It refers to the mass quantity of data that can be harnessed to improve decision making.

- Velocity: This characteristic represents the pace and speed at which data flows into the receiving system from various data sources.

- Variety: Variety covers data of various types and from various data sources. It can contain both structured and unstructured data.

- Veracity: This characteristic represents the trustworthiness of the data or the level of reliability associated with certain types of data.

2013 is predicted to be the age of Big Data, and the vendors are ready with their tools sharpened to get and give that extra edge which might make all the difference.

[Reference: Click here to know more in detail about Big Data]

________________________________________________________________________________

This huge volume of data can be stored in a local system or across a distributed system. So let's first understand the difference between the two.

We will have silos of data pertaining to the same group, which can be called information only if properly processed by various data governance and data management tools. The fact is there are so many of them, some in small chunks and some in bigger ones, lying across the organization, all ready to help you boost your sales and revenue, but.

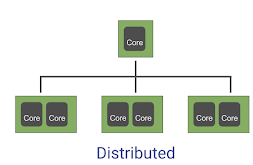

Local Vs Distributed

We can use a local system to store data that fits on a single computer, on a scale of roughly 0-32 GB depending on RAM.

One local machine can have multiple cores. A local process uses the computational resources of a single machine.

However, a larger data set may not fit in memory. Instead of holding the data in RAM, we can move it to storage on a hard drive, for example in a SQL database, or we can use a cloud SQL database. Beyond that, we need a distributed system, which distributes the data across multiple machines.

In a distributed architecture there is a controlling core which controls the distribution across several parallel cores. A distributed process has access to the computational resources of a number of machines connected through a network.

It is easier to scale out to many lower-CPU machines than to try to scale up to a single machine with a high-end CPU and more RAM.
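The controller-and-workers idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: threads on one machine stand in for separate machines on a network, and the names `partition`, `worker_sum` and `distributed_sum` are hypothetical, not part of any framework.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, num_workers):
    """Split data into at most num_workers contiguous chunks of similar size."""
    size = max(1, -(-len(data) // num_workers))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def worker_sum(chunk):
    """Work done independently by one worker on its own chunk."""
    return sum(chunk)


def distributed_sum(data, num_workers=4):
    """Controller: hand one chunk to each worker, then combine the partial results."""
    chunks = partition(data, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_sums = list(pool.map(worker_sum, chunks))
    return sum(partial_sums)


data = list(range(1, 1001))
print(distributed_sum(data))  # same answer as sum(data), computed in pieces
```

Adding more workers (scaling out) only changes `num_workers`; the controller logic stays the same, which is part of why scaling out tends to be the easier path.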

A scenario where everything is big: "The Big Picture Scenario"

Think about an enterprise with a large-scale business, millions of customers, and varied business domains.

Yes, there comes the 'but' factor.

But you don't know which one to use, which one to rely on, which one to do the magic for you.

Technologists have answers for most of the problems that we face in today's world, and to answer your prayers they came up with special tools to address the problems and challenges of Big Data. The challenges include capture, cleaning, storage, search, sharing, analysis and visualization.

Just like a shepherd cannot take care of an elephant, Big Data is difficult to work with using relational databases and desktop statistics and visualization packages. The enormousness of data can only be realized with the passing of time and the growth of the business. Even the most scalable software/hardware combo has its limit, and after that we need a different approach. It's time to call the mahout.

Several frameworks have been written to address this challenge, one of them being Hadoop.

Apache Hadoop [Reference: Click here to know more in detail about Hadoop]

Apache Hadoop is an open-source software framework that supports data-intensive distributed applications, licensed under the Apache v2 license. It supports the running of applications on large clusters of commodity hardware. The Hadoop framework transparently provides both reliability and data motion to applications.

Let's take a look at the components of the Hadoop ecosystem.

As we read above, Hadoop is a way to distribute very large files across multiple machines. It uses the Hadoop Distributed File System (HDFS), which allows a user to work with large data sets and duplicates blocks of data for fault tolerance. Hadoop then uses MapReduce, which allows computation on that data.

Distributed Storage - HDFS

Simple example of Distributed Storage - HDFS

In the above example of HDFS there is one name node with CPU and RAM, and then three data nodes.

- HDFS will use blocks of data, with a size of 128 MB by default.

- Each of these blocks is replicated 3 times

- The blocks are distributed in a way to support fault tolerance or disaster recovery

- Smaller blocks provide more parallelization during processing

- Multiple copies of a block prevent loss of data due to a failure of a node
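The block and replication rules above can be made concrete with a small simulation. This is a hedged sketch, not how HDFS is implemented: the round-robin placement and the function names (`split_into_blocks`, `place_replicas`, `blocks_lost`) are assumptions made for illustration, and real HDFS uses a more involved placement policy.

```python
import math

BLOCK_SIZE_MB = 128      # default block size stated above
REPLICATION_FACTOR = 3   # each block is stored three times


def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the size in MB of each block; only the last block may be smaller."""
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    return [min(block_size_mb, file_size_mb - i * block_size_mb)
            for i in range(num_blocks)]


def place_replicas(num_blocks, data_nodes, replication=REPLICATION_FACTOR):
    """Assign each block to `replication` distinct nodes, round-robin (a simplification)."""
    if replication > len(data_nodes):
        raise ValueError("need at least as many data nodes as replicas")
    return {
        block_id: [data_nodes[(block_id + r) % len(data_nodes)]
                   for r in range(replication)]
        for block_id in range(num_blocks)
    }


def blocks_lost(placement, failed_node):
    """Blocks that have no surviving copy after a single node fails."""
    return [block for block, nodes in placement.items()
            if all(node == failed_node for node in nodes)]


blocks = split_into_blocks(300)  # a hypothetical 300 MB file
placement = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"])
for block_id, nodes in placement.items():
    print(f"block {block_id} ({blocks[block_id]} MB) -> {nodes}")
print("lost if node1 fails:", blocks_lost(placement, "node1"))
```

Because every block lives on three different nodes, the failure of any single node leaves at least two copies of each block available.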

Map Reduce

MapReduce is a way of splitting a computation across a distributed set of files (such as HDFS). It consists of a Job Tracker and multiple Task Trackers.

The Job Tracker sends code to run on the Task Trackers. The Task Trackers then allocate CPU and memory for the tasks and monitor them on the worker nodes.

All said and done, it boils down to two main questions.

1. How are Big Data projects actually implemented in industry?

2. How can we create a small project using Big Data?

Example of Big Data Projects in Industry

There are many use-case examples across multiple business domains such as healthcare, retail, financial services, manufacturing, etc.

- Master Data Management or Data Consolidation from multiple sources into a “data lake”

- Predictive modeling/analytics

- Real-time analytics

Sample Big Data Project using HADOOP

To implement a sample project in Big Data, we will first learn and implement individual components of the Hadoop ecosystem such as MapReduce, Pig, Hive, HBase, Spark, etc.

Installing Hadoop, Hive & Hbase on macOS

MapReduce

MapReduce is a data processing job that splits the input data into independent groups, which are then processed by the map function and further reduced by recursively grouping similar sets of data. Using Hadoop, the MapReduce framework allows code to be executed on multiple servers (a.k.a. nodes), removing the bottleneck of single-machine performance.

Nodes can be grouped into clusters, dispersing processing and memory constraints, for faster access to datasets.

Here is an overview of how MapReduce works.

# Sample Game of Thrones Dataset

# Looks like we have a few duplicates: Arya Stark and Bronn.

# While Daenerys Targaryen, Tyrion Lannister, Jon Snow, and Sansa Stark, all appear once.

Sample Data Set

- Arya Stark

- Daenerys Targaryen

- Jon Snow

- Arya Stark

- Bronn

- Sansa Stark

- Tyrion Lannister

- Bronn

First we execute the Map job, which takes the subsets of your data and isolates the entries in each one. For each entry we assign a key-value pair and create a tuple (k_i, v_i).

For each key (k_i), which in our example is a GOT name, we assign the value (v_i) as 1, because we want to count the number of times each name appears.

# Part 1 - Map and subset

Arya Stark

Daenerys Targaryen

Jon Snow

Arya Stark

Bronn

Sansa Stark

Tyrion Lannister

Bronn

# Subset 1

Arya Stark → Key: ‘Arya Stark’, Value: ‘1’

Daenerys Targaryen → Key: ‘Daenerys Targaryen’, Value: ‘1’

Jon Snow → Key: ‘Jon Snow’, Value: ‘1’

Arya Stark → Key: ‘Arya Stark’, Value: ‘1’

# Subset 2

Bronn → Key: ‘Bronn’, Value: ‘1’

Sansa Stark → Key: ‘Sansa Stark’, Value: ‘1’

Tyrion Lannister → Key: ‘Tyrion Lannister’, Value: ‘1’

Bronn → Key: ‘Bronn’, Value: ‘1’
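The Map step above can be sketched in plain Python. This is a single-machine illustration only: the helper names `split_into_subsets` and `map_names` are hypothetical, and on a real cluster each subset would be handled by a different node.

```python
names = [
    "Arya Stark", "Daenerys Targaryen", "Jon Snow", "Arya Stark",
    "Bronn", "Sansa Stark", "Tyrion Lannister", "Bronn",
]


def split_into_subsets(records, num_subsets):
    """Cut the input into contiguous subsets, one per (simulated) mapper."""
    size = max(1, -(-len(records) // num_subsets))  # ceiling division
    return [records[i:i + size] for i in range(0, len(records), size)]


def map_names(subset):
    """Emit a (key, value) tuple with value 1 for every entry in the subset."""
    return [(name, 1) for name in subset]


subsets = split_into_subsets(names, 2)
mapped = [map_names(subset) for subset in subsets]
for number, pairs in enumerate(mapped, start=1):
    print(f"Subset {number}: {pairs}")
```

Note that the mapper does not try to detect duplicates. It emits one tuple per entry, and counting is left entirely to the Reduce step.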

Now we execute the Reduce job, which takes the tuples from the Map job and reduces them so that each unique key appears only once, with its values added together.

# Part 2 - Reduce

# Our tuples

((Arya Stark, 1), (Daenerys Targaryen, 1), (Jon Snow, 1), (Arya Stark, 1), (Bronn, 1), (Sansa Stark, 1), (Tyrion Lannister, 1), (Bronn, 1))

# Our reduced tuples

((Arya Stark, 2), (Daenerys Targaryen, 1), (Jon Snow, 1), (Bronn, 2), (Sansa Stark, 1), (Tyrion Lannister, 1))

What we are left with is a reduced subset of our original data, with the number of times each unique key appears.

So we’ve taken the list of 8 GOT characters and reduced it down to 6 tuples.

Below is another example of the MapReduce process, but instead of person names, the dataset contains 9 letters:

# Example 2

[K, D, D, C, D, A, K, A, C]

Using the same MapReduce technique we can reduce our data to 4 tuples.

(A, 2), (D, 3), (C, 2), (K, 2)
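Both counting examples can be reproduced with a short Python sketch that runs on one machine. The function names are hypothetical, and the grouping step (often called "shuffle") is written out separately here as an assumption about how the tuples reach the reducer; it is not a Hadoop API.

```python
from collections import defaultdict


def map_phase(records):
    """Map: emit (key, 1) for every record."""
    return [(record, 1) for record in records]


def shuffle(pairs):
    """Group all values that share the same key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: add up the values of each unique key."""
    return {key: sum(values) for key, values in groups.items()}


def count_occurrences(records):
    """Run map, shuffle and reduce in sequence."""
    return reduce_phase(shuffle(map_phase(records)))


got_names = [
    "Arya Stark", "Daenerys Targaryen", "Jon Snow", "Arya Stark",
    "Bronn", "Sansa Stark", "Tyrion Lannister", "Bronn",
]
letters = ["K", "D", "D", "C", "D", "A", "K", "A", "C"]

print(count_occurrences(got_names))
print(count_occurrences(letters))
```

The 8 names collapse to 6 unique keys and the 9 letters to 4, matching the reduced tuples above.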

Here is a visual example of the MapReduce process.

Installing PIG on macOS

I use Homebrew to install Pig:

$ brew install pig

Updating Homebrew...

==> Auto-updated Homebrew!

Updated Homebrew from 718a43517 to 2aefcb37c.

Updated 3 taps (homebrew/cask-versions, homebrew/core and homebrew/cask).

==> Updated Formulae

node ✔ fonttools pdftoipe poppler sipsak step unrar

diff-pdf joplin plantuml sbcl sonobuoy swig vulkan-headers

docker-credential-helper mosquitto pmd shpotify sourcekitten txr youtube-dl

==> Downloading https://www.apache.org/dyn/closer.cgi?path=pig/pig-0.17.0/pig-0.17.0.tar.gz

==> Downloading from http://us.mirrors.quenda.co/apache/pig/pig-0.17.0/pig-0.17.0.tar.gz

######################################################################## 100.0%

🍺 /usr/local/Cellar/pig/0.17.0: 182 files, 204.5MB, built in 28 seconds

What we covered so far can be thought of in two distinct parts:

- Using HDFS to distribute large data sets

- Using MapReduce to distribute a computational task to a distributed data set

Next we will learn about the latest technology in this space known as Spark.

Kinshuk Dutta

New York
